How App Store Rankings Actually Work (Based on Patterns, Not Claims)

If you’ve ever watched an app climb the App Store rankings and wondered why it’s moving now, you’re not alone.

Developers notice it.

Marketers notice it.

Even casual users notice it when apps suddenly appear, then slowly disappear again.

The strange part is that most explanations don’t line up with what people actually see.

An app gets great reviews but doesn’t move.

Another app spikes with very few reviews.

A small update causes a ranking jump days later.

A featured app doesn’t rank any better for keywords.

This guide was created to document those moments.

Not as isolated events, but as patterns.

Instead of asking “how does the App Store ranking algorithm work,” this guide asks a more practical question:

What tends to happen before rankings change, and what tends to happen after?

Everything here is based on observed behavior across real App Store listings over time. Rankings before and after launches. Before and after updates. Before and after review bursts. Across competitive and non-competitive categories.

No single signal explains rankings.

But certain signals show up more often than others.

And those patterns are surprisingly consistent.

This isn’t a guide you read once and forget. It’s meant to be a reference: something you come back to when rankings don’t make sense and you want a clearer mental model of what’s probably happening behind the scenes.

If you care about how the App Store behaves, not just what it claims, this is where to start.

If you need a quick summary of how App Store rankings tend to behave, without context or caveats, this is it.

- App Store rankings respond more to recent change than long-term consistency

- Momentum matters more than total lifetime downloads

- Review timing and clustering matter more than review volume

- App updates often trigger re-evaluation, not automatic improvement

- Rankings move in cycles, not continuously

- Category competition heavily shapes outcomes

- Featuring increases visibility, not rankings directly

- Rankings are relative, not absolute

- Most ranking movement reflects combined signals, not single actions

1. What This Guide Is — and What It Isn’t

Before getting into rankings, it’s important to be clear about what kind of guide this is.

App Store rankings are one of those topics where assumptions spread faster than evidence. Over time, a lot of advice has turned into “rules” simply because it gets repeated often enough.

This section exists to set expectations.

1.1 What This Guide Is

This guide is a pattern-based analysis of how App Store rankings behave over time.

It looks at what tends to happen before rankings move, while they move, and after they settle again. The focus isn’t on individual success stories, but on repeated behavior seen across different apps, categories, and situations.

More specifically, this guide is:

- Based on observable ranking movement, not official explanations

- Focused on correlation, not guaranteed causes

- Built from real App Store listings, not theoretical models

- Interested in how rankings react to change, not how to manipulate them

The goal is to help you form a realistic mental model of how rankings behave, especially when they seem inconsistent or delayed.

1.2 What This Guide Is Not

This guide intentionally avoids a few common approaches.

It is not an ASO checklist. There are no steps to follow, no “do this and rank higher” promises, and no quick wins outlined here.

It is not based on insider information. There is no access to Apple’s internal systems, ranking formulas, or weighting rules.

It does not treat data from paid ASO tools as fact. Tools can be useful for tracking, but they often turn estimates into certainty, which is where confusion starts.

And it is not claiming that these patterns will apply in every situation. App Store rankings are influenced by many variables at once, and exceptions always exist.

What this guide aims to do is simpler, and more useful long-term:

To document what the App Store consistently appears to respond to, and what it consistently seems to ignore.

1.3 How to Use This Guide

This isn’t a guide you rush through.

Some sections will feel obvious. Others may challenge things you’ve heard before. The most value comes from reading it with a specific situation in mind — a launch, an update, a ranking drop, or a sudden spike that didn’t make sense at the time.

Think of this as a reference, not a playbook.

When rankings move and the usual explanations don’t add up, this guide is meant to help you reason through why that might be happening.


2. Methodology: How These Patterns Were Observed

This guide is based on observation, not access.

There’s no single dataset behind it, no internal metrics, and no claim of knowing how Apple’s systems are built. Instead, it comes from repeatedly watching how App Store rankings change over time, then comparing those changes across different situations.

The goal of this section isn’t to sound impressive. It’s to explain, as clearly as possible, what was looked at, what was compared, and what was intentionally left out.

2.1 Scope of Observation

The observations in this guide come from tracking ranking behavior across a wide range of App Store listings.

This includes apps that are:

- New launches and long-established

- Free and paid

- Highly ranked and barely visible

- In crowded categories and low-competition niches

Rather than focusing on a single category or app type, the goal was to notice what stayed consistent even when everything else changed.

If a pattern only appeared in one niche, it was treated cautiously. Patterns that showed up across different categories and app sizes were given more weight.

2.2 What Was Actively Observed

The following elements were watched over time and compared against ranking movement:

- Keyword ranking changes

- Category chart position changes

- Sudden increases or drops in visibility

- Timing of app updates

- Timing and clustering of user reviews

Importantly, these weren’t treated as isolated signals. The focus was on timing and sequence — what happened first, what followed, and how long the effect lasted.

For example, when an app update went live, rankings were not checked once and forgotten. They were checked over days and weeks to see if movement was immediate, delayed, or temporary.

2.3 What Was Compared

Patterns only start to matter when you have something to compare them against.

In practice, that meant looking at:

- Apps that launched with strong momentum vs apps that grew slowly

- Apps that received review bursts vs apps with steady review trickles

- Apps that updated frequently vs apps that stayed unchanged

- Apps in similar categories behaving differently under similar conditions

When two apps behaved differently despite similar surface-level metrics, that difference often revealed more than cases where everything moved as expected.

2.4 What Was Intentionally Excluded

Just as important as what was included is what was left out.

This guide does not rely on:

- Private or undocumented APIs

- Apple’s internal ranking weights

- Claims from ASO tools presented as fact

- One-off case studies treated as universal rules

Paid tools can be helpful for tracking trends, but they often simplify complex systems into clean numbers. For the purpose of this guide, rankings themselves were treated as the primary signal, not third-party estimates.

2.5 How Patterns Were Treated

Not every observation made it into this guide.

For a pattern to be included, it had to meet a simple standard:

It needed to appear more than once, across different apps, in different contexts.

Single examples were treated as anecdotes. Repeated behavior was treated as a pattern. Even then, patterns were framed as tendencies, not guarantees.

Where outcomes varied, that variation is noted. Where behavior was consistent, it’s described carefully, without assuming causation.

2.6 Why This Approach Was Chosen

The App Store is a closed system. Exact answers aren’t available from the outside.

In that kind of environment, the most reliable way to understand behavior isn’t to look for formulas — it’s to watch outcomes, compare them, and stay honest about uncertainty.

This methodology won’t produce shortcuts.

But it does produce explanations that match what people actually see.

And for understanding App Store rankings, that turns out to be far more useful.

3. A Simple Mental Model of App Store Rankings

Before looking at individual signals, it helps to understand how App Store rankings behave at a high level.

Not how they’re calculated.

Not how Apple describes them.

But how they tend to respond over time.

Once this mental model is clear, most ranking movements stop feeling random.

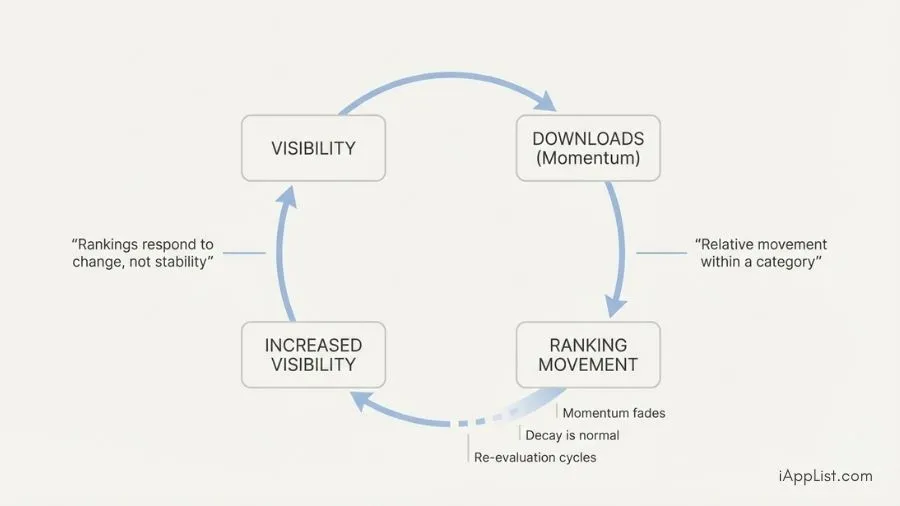

3.1 Rankings Respond to Change, Not Stability

One of the most consistent patterns is that App Store rankings react far more strongly to change than to steady performance.

An app that performs well but doesn’t change much often holds its position or slowly drifts. An app that suddenly changes — in visibility, downloads, or user activity — is far more likely to trigger noticeable movement.

This is why:

- New launches spike, then settle

- Viral moments cause fast climbs, followed by drops

- Updates often lead to temporary reshuffling

The ranking system appears to be tuned to detect movement, not to reward long-term consistency on its own.

Consistency matters, but it matters more for maintaining a baseline than for driving upward motion.

3.2 The Feedback Loop That Drives Most Ranking Movement

Most ranking changes can be understood as part of a simple loop:

Visibility → Downloads → Ranking → More Visibility

When something increases visibility — a launch, a feature mention, outside traffic — downloads tend to follow. That increase in downloads pushes rankings higher, which creates even more visibility, reinforcing the cycle.

The loop is powerful, but it isn’t permanent.

Over time, the initial source of momentum fades. Downloads slow. Rankings stop climbing. Eventually, the app settles into a new equilibrium below its peak.

This is not failure. It’s normalization.

Understanding this loop explains why rankings often feel exciting at first and disappointing later, even when nothing “went wrong.”
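A toy simulation makes the loop concrete. It is not how Apple computes anything. The following are all hypothetical: the assumption that a ranking score simply tracks the previous day’s downloads, the `feedback` multiplier that turns ranking into extra visibility, the `simulate_feedback_loop` helper itself, and every number passed to it. The only goal is to show how a fading external boost, fed through the loop, produces a spike and then normalization.

```python
def simulate_feedback_loop(days, base_visibility, boost, boost_decay,
                           conversion, feedback):
    """Toy model of Visibility -> Downloads -> Ranking -> More Visibility.

    Every parameter is a hypothetical illustration; none come from Apple.
    """
    history = []
    ranking_score = 0.0   # a new app starts with no ranking-driven visibility
    external = boost      # launch or outside attention, fading each day
    for _ in range(days):
        # Visibility = organic baseline + external boost + visibility earned by rank
        visibility = base_visibility + external + feedback * ranking_score
        downloads = conversion * visibility
        # Assumption: the ranking score simply tracks the most recent downloads
        ranking_score = downloads
        history.append(downloads)
        external *= boost_decay  # the original source of momentum fades
    return history


daily = simulate_feedback_loop(days=60, base_visibility=100, boost=1000,
                               boost_decay=0.7, conversion=0.1, feedback=5)
print(round(max(daily), 1), round(daily[-1], 1))
```

With these made-up numbers, downloads peak on the second day at about 135. They then drift down toward 20, the level that baseline visibility sustains on its own: 0.1 × 100 ÷ (1 − 0.1 × 5). In this model, keeping `conversion * feedback` below 1 is what lets the loop settle instead of growing without limit.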

3.3 Why Ranking Movement Is Often Delayed

Another common source of confusion is timing.

Ranking changes don’t always happen immediately after something changes. Updates go live, reviews appear, downloads increase — and rankings move days later, not hours later.

This suggests that the App Store evaluates apps in intervals, not in real time. Signals appear to be aggregated, reassessed, and then reflected in rankings after a delay.

Because of this, cause and effect often feel disconnected. Something happens, nothing changes, and then movement appears later, when attention has already shifted.

This delay is one reason people misattribute ranking changes to the wrong actions.
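Here is a minimal sketch of why interval-based evaluation feels delayed. For illustration only, it assumes that a score is recomputed once per fixed interval from the average of that interval’s activity, and that the previous score stays visible until then. Apple has not published any interval length or aggregation rule, and `evaluate_in_intervals` is a hypothetical helper.

```python
def evaluate_in_intervals(daily_signal, interval, initial=0.0):
    """Score visible each day when it is only recomputed every `interval` days."""
    visible = []
    shown = initial
    window = []
    for value in daily_signal:
        visible.append(shown)          # today's ranking reflects the last evaluation
        window.append(value)
        if len(window) == interval:    # end of an evaluation period
            shown = sum(window) / interval
            window = []
    return visible


signal = [10, 10, 10, 40, 40, 40, 40, 40, 40]   # activity jumps on day 3
visible = evaluate_in_intervals(signal, interval=3, initial=10)
first_change = next(i for i, v in enumerate(visible) if v != visible[0])
print(first_change)  # 6
```

In this sketch, the jump in activity on day 3 only becomes visible on day 6. Whatever else happened in between, such as an update or a description edit, is easy to credit for the movement, even though the model only ever sees the activity signal.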

3.4 Why Rankings Feel Unstable — Even When Nothing Changed

From the outside, rankings can look chaotic.

Apps move up without obvious reasons. Others drop despite steady performance. But many of these shifts aren’t caused by the app itself.

They’re caused by relative change.

If competing apps in the same category suddenly gain momentum, even a stable app can fall. If competitors slow down, a stable app can rise without doing anything new.

Rankings are not absolute scores. They’re relative positions in a moving environment.

Once this is understood, ranking volatility starts to make sense.
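Relative ranking is easy to see in a toy example. The scores below are abstract, hypothetical numbers standing in for whatever combination of signals the store actually uses. `rank_of` and the app names are illustrative only.

```python
def rank_of(app, scores):
    """1-based chart position of `app`, ordering every app by score (highest first)."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return ordered.index(app) + 1


week_1 = {"our_app": 50, "rival_a": 40, "rival_b": 30}
week_2 = {"our_app": 50, "rival_a": 60, "rival_b": 55}  # our score is unchanged

print(rank_of("our_app", week_1), rank_of("our_app", week_2))  # 1 3
```

Nothing about `our_app` changed between the two weeks. It still falls from first to third, because its competitors gained.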

3.5 How to Use This Mental Model Going Forward

Everything in the next sections builds on this idea:

Rankings respond to momentum, relative change, and re-evaluation cycles — not isolated metrics.

When a ranking moves, the most useful question isn’t what did we do?

It’s what changed around this app, and when?

Keeping that question in mind makes the patterns in the following sections much easier to interpret.

4. Signals That Consistently Correlate With Ranking Movement

There is no single signal that explains App Store rankings.

But when rankings move in noticeable ways, certain signals tend to appear more often than others. Not always. Not in isolation. But frequently enough to stand out when looking across different apps and categories.

This section focuses on those signals.

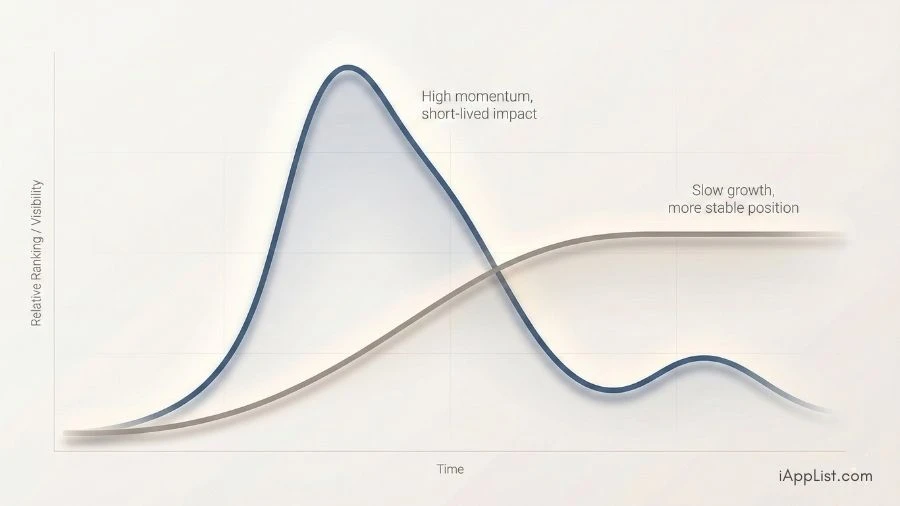

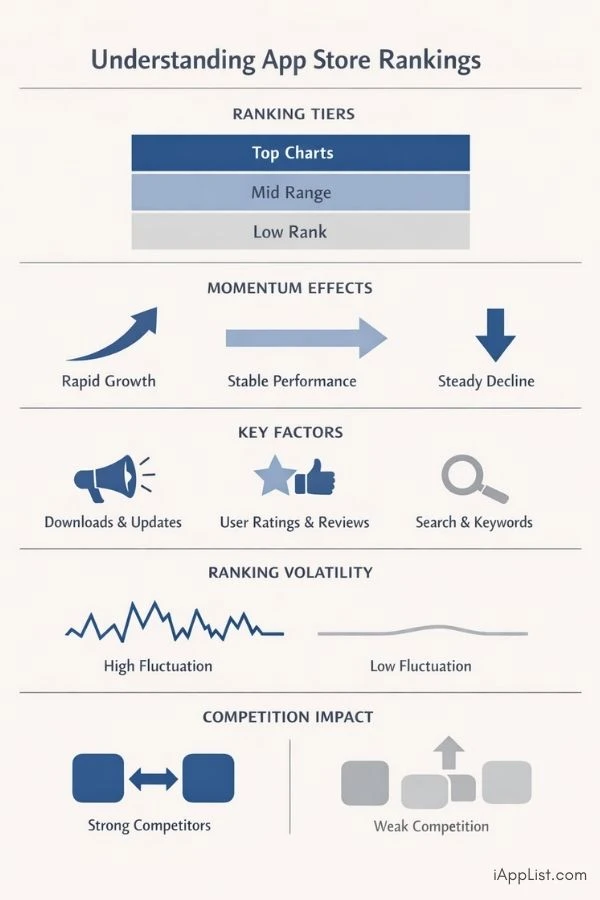

4.1 Download Velocity (Not Total Downloads)

One of the clearest patterns is that how fast downloads increase matters more than how many downloads an app has accumulated overall.

Apps with large lifetime download counts often remain stable but stagnant. Apps with smaller totals but sudden spikes tend to move more aggressively in rankings.

This helps explain why:

- New apps can outrank older, well-known apps

- Rankings jump sharply after short bursts of attention

- Long-term growth feels slower than expected

Ranking movement appears to respond to recent acceleration, not historical success. Once that acceleration fades, rankings tend to soften as well.
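One way to see the difference between totals and velocity is to compute both. For illustration, velocity is defined here as the change in average daily downloads between the most recent week and the week before it. That definition is an assumption: the store’s real measure is unknown, if it uses one like this at all. Both download histories are made up.

```python
def weekly_velocity(daily_downloads):
    """Change in average daily downloads: the last 7 days minus the 7 days before."""
    if len(daily_downloads) < 14:
        raise ValueError("need at least 14 days of download data")
    last_week = sum(daily_downloads[-7:]) / 7
    prior_week = sum(daily_downloads[-14:-7]) / 7
    return last_week - prior_week


established = [1000] * 365                                  # large, flat history
newcomer = [50] * 7 + [50, 100, 200, 300, 400, 500, 600]    # small but accelerating

print(sum(established), sum(newcomer))                       # 365000 2500
print(weekly_velocity(established), round(weekly_velocity(newcomer), 1))  # 0.0 257.1
```

The established app has far more lifetime downloads but zero acceleration. The newcomer has a small total and a steep climb, which is the profile the patterns above associate with ranking movement.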

4.2 Short-Term User Activity Bursts

Closely related to download velocity is the idea of activity bursts.

These are short periods where user interest increases noticeably over a compressed time frame. They often come from:

- Launches

- Mentions outside the App Store

- Temporary promotions

- Viral moments

What matters is not just that activity increased, but that it increased quickly.

Sustained, flat activity tends to maintain rankings. Sudden change tends to trigger re-evaluation.

4.3 Review Timing and Clustering

Reviews do correlate with ranking movement, but not in the way they’re usually described.

The pattern that appears most often is not volume, but timing.

When reviews arrive in clusters — especially shortly after increased downloads — ranking movement is more likely to follow. Reviews spread thinly over long periods tend to have far less visible impact.

This helps explain why:

- A small number of recent reviews can coincide with ranking jumps

- Apps with thousands of old reviews don’t always move

- Star rating alone doesn’t predict ranking changes

Reviews seem to act more as confirmation of recent activity than as a standalone driver.
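Clustering can be measured as the largest number of reviews that arrive inside any short window. The sketch below uses a sliding seven-day window. The window length, the `max_reviews_in_window` helper, and both review timelines are illustrative assumptions, not known App Store parameters.

```python
def max_reviews_in_window(review_days, window=7):
    """Largest number of reviews that land inside any `window`-day span."""
    days = sorted(review_days)
    best = 0
    start = 0
    for end in range(len(days)):
        # Shrink the window from the left until it spans fewer than `window` days
        while days[end] - days[start] >= window:
            start += 1
        best = max(best, end - start + 1)
    return best


trickle = list(range(28))                            # one review a day for four weeks
burst = [10] * 7 + [11] * 7 + [12] * 7 + [13] * 7    # the same 28 reviews in four days

print(len(trickle), len(burst))                                      # 28 28
print(max_reviews_in_window(trickle), max_reviews_in_window(burst))  # 7 28
```

Both apps end up with 28 reviews, but one shows a cluster four times denser than the other. A total-count view treats them identically; a timing view does not.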

4.4 App Updates as Re-Evaluation Triggers

App updates frequently line up with ranking movement, even when the update itself is minor.

This suggests that updates may act as re-evaluation points, prompting the system to reassess the app using recent signals.

Common patterns include:

- Short-term ranking lifts after updates

- Temporary drops followed by recovery

- No change at all when there is no supporting momentum

Updates don’t appear to boost rankings on their own. They appear to amplify or reveal signals that already exist.

4.5 Combined Signals Matter More Than Isolated Ones

The strongest ranking movements tend to occur when multiple signals align.

For example:

- A download spike followed by review clustering

- An update coinciding with renewed visibility

- Increased activity in a relatively weak category

Single signals sometimes correlate with movement. Multiple signals almost always correlate more strongly.

This is one reason rankings feel inconsistent when looking at one metric at a time. The system appears to respond to patterns of behavior, not individual numbers.

4.6 Why These Signals Aren’t Guarantees

Even when these signals are present, rankings don’t always move as expected.

Category competition, timing, and external factors all play a role. What matters is not that these signals cause ranking changes, but that they tend to appear nearby in time when ranking changes happen.

Understanding that distinction is critical.

The goal isn’t to chase signals.

It’s to recognize them when rankings move, so the movement makes sense.

5. Signals That Matter — But Less Than People Think

Not every commonly discussed App Store signal is useless.

Some of them do matter. Just not in the direct, predictable way they’re often presented. This section focuses on signals that appear to have indirect or secondary influence, rather than being strong drivers on their own.

Understanding this helps avoid chasing changes that look productive but rarely move rankings.

5.1 App Description Keywords

App descriptions are frequently treated as a major ranking factor.

In practice, changes to description keywords alone rarely line up with noticeable ranking movement. Apps often remain in the same position before and after description edits, especially when nothing else changes.

This suggests that descriptions may play a supporting role — helping with clarity or conversion — rather than acting as a primary ranking driver.

Where keyword placement appears to matter more consistently is in higher-visibility fields, such as the app’s title and subtitle. Descriptions seem to have diminishing returns beyond that.

5.2 Screenshot Quality and Visual Presentation

High-quality screenshots clearly matter for user experience.

They help explain the app, set expectations, and influence whether someone chooses to download. What they don’t appear to do, on their own, is trigger ranking movement.

Better visuals can improve conversion, which can indirectly support ranking-related signals. But visual improvements without a change in user activity rarely correlate with ranking shifts.

In other words, screenshots help people decide. Rankings respond when people act.

5.3 App Age and History

There’s a common assumption that older apps are trusted more and therefore rank better.

In observed patterns, app age doesn’t reliably protect rankings or guarantee visibility. Older apps often maintain stable positions, but they don’t automatically outrank newer ones with stronger momentum.

History seems to matter most as a baseline. Momentum still determines movement.

This helps explain why long-established apps can slowly drift downward if newer competitors consistently generate more recent activity.

5.4 Frequent Micro-Updates

Some advice encourages frequent updates, even when little has changed.

Observed behavior suggests that updates can trigger re-evaluation, but only when they’re supported by other signals. Micro-updates without renewed user interest rarely lead to sustained ranking improvement.

In some cases, frequent updates appear to create noise without benefit. Rankings move briefly, then return to previous levels.

Updates seem to amplify momentum, not replace it.

5.5 Why These Signals Still Get Attention

These signals persist in ranking discussions because they are visible and controllable.

It’s easy to rewrite a description.

It’s easy to redesign screenshots.

It’s easy to push a small update.

It’s much harder to generate real changes in user behavior.

That imbalance makes secondary signals feel more powerful than they actually are. In reality, they tend to support stronger signals rather than drive ranking movement on their own.

6. What Appears to Matter Very Little (Despite Popular Belief)

Some App Store ranking ideas are repeated so often that they start to feel unquestionable.

But when you look at ranking movement over time, certain commonly cited factors show surprisingly weak correlation with meaningful changes. That doesn’t mean they’re useless. It means their influence is often overstated.

This section focuses on signals that rarely explain ranking movement on their own, even though they’re frequently mentioned.

6.1 Total Lifetime Downloads

Large download numbers look impressive, but they don’t consistently translate into ranking strength.

Apps with millions of lifetime downloads can remain flat or slowly decline, while smaller apps with sudden momentum can overtake them temporarily.

Observed patterns suggest that lifetime totals function more as historical context than as an active ranking driver. They don’t disappear, but they don’t seem to outweigh recent behavior.

This helps explain why rankings can feel harsh to established apps — past success doesn’t guarantee current visibility.

6.2 Old 5-Star Reviews

A high average rating built over years doesn’t reliably protect an app from ranking drops.

Older reviews appear to fade in influence over time. Apps with strong historical ratings but little recent activity often struggle to maintain momentum, while apps with fewer but recent reviews can move more quickly.

This suggests that recency matters more than volume. Reviews seem to reflect current interest, not just long-term satisfaction.
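The idea that older reviews fade can be expressed as a recency weight. This sketch assumes exponential decay with a 30-day half-life. That is an illustrative choice, not anything Apple has documented, and `recency_weighted_count` and both review histories are hypothetical. Star ratings are deliberately left out, since the pattern concerns timing rather than score.

```python
def recency_weighted_count(review_ages_days, half_life_days=30.0):
    """Sum of review weights, where each review's weight halves every `half_life_days`."""
    return sum(0.5 ** (age / half_life_days) for age in review_ages_days)


old_favorite = [365] * 2000          # 2,000 reviews, all about a year old
recent_app = [1] * 20 + [2] * 20     # 40 reviews from the last two days

print(recency_weighted_count(old_favorite))
print(recency_weighted_count(recent_app))
```

Under these assumptions, 2,000 year-old reviews carry a combined weight of about 0.4. Forty reviews from the last two days carry about 38.6.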

6.3 Frequent Micro-Updates Without Change

Updating an app frequently, without meaningful changes or renewed interest, rarely correlates with lasting ranking improvement.

In some cases, micro-updates line up with brief ranking movement. But without supporting signals — increased downloads, reviews, or visibility — rankings usually normalize quickly.

Updates appear to open a window for re-evaluation. If nothing new enters that window, little changes.

6.4 Isolated ASO Tweaks

Small, isolated tweaks — changing a keyword here, reordering screenshots there — often produce little to no visible ranking impact on their own.

When ranking movement does follow these changes, it’s often difficult to separate the tweak from other factors happening around the same time.

This is one reason many ranking “wins” are misattributed. The change that’s easiest to see is credited, even when the actual driver occurred elsewhere.

6.5 Why These Beliefs Persist

These ideas persist because they offer control.

They’re things developers and marketers can change without waiting for users to behave differently. That makes them appealing, even when evidence for their effectiveness is weak.

Ranking systems, however, tend to reward behavior, not effort.

Recognizing which actions rarely move rankings helps focus attention on signals that actually align with how the App Store responds.

7. Category Dynamics: The Overlooked Factor

App Store rankings don’t exist in isolation.

Every app competes inside a category, and that context quietly shapes how rankings behave. Two apps with similar performance can see very different results simply because the environment around them is different.

This is one of the most overlooked aspects of App Store behavior.

7.1 Why Category Competition Changes Everything

Rankings are relative.

An app doesn’t just need momentum — it needs more momentum than nearby competitors. In categories where many apps are actively growing at the same time, ranking movement becomes harder and more volatile.

In less competitive categories, smaller changes can have outsized effects.

This helps explain why:

- Average apps can rank well in weak categories

- Strong apps struggle to break through in crowded ones

- The same signal produces different outcomes depending on context

The App Store doesn’t evaluate apps in a vacuum. It evaluates them against whatever else is happening in that space.

7.2 Weak Categories vs Crowded Categories

In categories with limited competition, small bursts of activity often lead to noticeable ranking movement. A modest increase in downloads or reviews can shift positions quickly because there are fewer strong signals competing for attention.

In crowded categories, the opposite is true.

Even meaningful improvements can be absorbed by the noise. Rankings move, but the movement is slower, and gains are harder to maintain.

This creates the illusion that “nothing works,” when in reality, the same actions simply carry less weight in that environment.

7.3 Why Rankings Feel Harsher in Competitive Categories

In highly competitive categories, rankings tend to decay faster.

Momentum fades more quickly because competitors are constantly generating their own spikes. An app that pauses or slows down doesn’t just stop climbing — it often drops as others surge past it.

This creates a treadmill effect where maintaining position requires ongoing activity, not just occasional bursts.

Understanding this dynamic makes ranking drops feel less personal and more structural.

7.4 Category Switching: When It Helps and When It Hurts

Changing categories can dramatically alter ranking behavior, but it’s not a guaranteed improvement.

In some cases, moving to a less competitive category results in immediate visibility and higher rankings. In others, the app loses relevance and struggles to gain traction at all.

Observed patterns suggest category switching works best when:

- The app clearly fits the new category

- Competition is meaningfully lower

- User behavior aligns with category expectations

When those conditions aren’t met, switching categories can reset momentum without providing a new advantage.

7.5 Why Category Context Explains Many “Unfair” Rankings

Many ranking outcomes feel unfair because they’re evaluated without considering category context.

An app can do everything “right” and still lose ground if the surrounding environment shifts. Conversely, an app can do very little and rise simply because others slowed down.

Once category dynamics are factored in, many confusing ranking changes start to make sense.

8. Featured vs Ranked: Two Different Systems

Featured apps and ranked apps often get talked about as if they’re part of the same mechanism.

They aren’t.

While both affect visibility, they operate through different systems and respond to different signals. Confusing the two is one of the main reasons App Store behavior feels inconsistent.

8.1 What Featured Placement Actually Does

Featuring is an editorial decision.

When Apple features an app, it increases exposure through curated sections, collections, and stories. This visibility can drive downloads, but it doesn’t directly control where an app ranks for keywords or in category charts.

In practice, featured apps often see:

- Short-term spikes in attention

- Increased traffic from browsing, not search

- Temporary increases in downloads

What happens next depends on how users respond.

If the feature leads to sustained activity, rankings may improve. If attention fades quickly, rankings often return to previous levels.

Featuring opens a door. It doesn’t push the app through it.

8.2 How Ranked Visibility Works Differently

Ranking is algorithmic.

Search results and category charts appear to respond primarily to patterns of user behavior — downloads, momentum, and relative performance within a category.

An app can rank highly without ever being featured.

An app can be featured without ranking any higher.

The two systems overlap only indirectly, through user activity.

This is why some apps surge in rankings after being featured, while others barely move at all.

8.3 Why Featured Apps Sometimes Don’t Rank Better

When featured exposure doesn’t translate into ranking movement, it’s usually because:

- The surge in downloads is brief

- Reviews don’t cluster alongside the spike

- Category competition absorbs the momentum

In these cases, the feature creates visibility without creating lasting signals.

From the outside, this looks like the App Store “ignoring” the feature. In reality, the systems are simply responding to different inputs.

8.4 Why People Confuse Featuring With Ranking

Featuring is visible. Ranking is abstract.

It’s easy to notice when an app is highlighted by Apple. It’s harder to see the underlying behavior that affects rankings. When a ranking change follows a feature, the two are naturally linked, even when other factors played a larger role.

Over time, this reinforces the belief that being featured automatically boosts rankings, even though observed patterns show that the relationship is indirect at best.

8.5 How to Think About Featuring in Context

The most useful way to think about featuring is as a visibility amplifier, not a ranking signal.

Featuring increases exposure. What matters next is what users do with that exposure.

When featuring aligns with strong momentum, rankings often follow. When it doesn’t, the effect is usually temporary.

Understanding this distinction removes a lot of confusion around why some featured apps rise — and others don’t.

9. Patterns vs Myths (Quick Reference)

A lot of App Store advice sounds convincing because it’s simple. Over time, those simplifications turn into “rules,” even when observed behavior doesn’t consistently support them.

This table summarizes some of the most common claims about App Store rankings, alongside what recurring patterns suggest instead.

It’s not meant to dismiss these ideas entirely — only to place them in context.

| Common Claim | What Patterns Suggest |
|---|---|
| More reviews automatically improve rankings | Review timing and clustering matter more than total volume |
| High star ratings guarantee visibility | Ratings help trust, but don’t drive movement on their own |
| Keywords in the description boost rankings | Title and subtitle appear far more influential |
| Frequent updates always help rankings | Updates trigger re-evaluation, not guaranteed improvement |
| Big apps are protected from drops | Momentum fades regardless of app size |
| Featured apps rank higher by default | Featuring increases exposure, not ranking directly |
| Lifetime downloads matter most | Recent activity outweighs historical totals |
| Paid apps can’t rank well | They can, but often decay faster without momentum |
| ASO tweaks drive most ranking changes | User behavior aligns more closely with movement |

These aren’t hard rules.

Each “pattern” reflects behavior that appears repeatedly across different apps and categories. In isolation, none of these factors explains rankings completely. Together, they help form a more accurate mental model of how ranking movement tends to happen.

The key takeaway is simple:

Rankings follow behavior over time, not checklists.

10. Real-World Ranking Timelines (Conceptual Examples)

App Store rankings are easiest to understand when viewed over time.

Instead of focusing on individual signals, this section walks through common ranking timelines — not tied to specific apps, but based on repeated patterns seen across many listings.

These examples are simplified, but they reflect how rankings often behave in practice.

10.1 The Typical New App Launch Pattern

This is one of the most common timelines.

- The app launches with initial visibility

- Downloads increase quickly over a short period

- Rankings rise sharply

- Momentum slows

- Rankings gradually decline and stabilize

This pattern explains why early success can feel dramatic and short-lived. The initial spike reflects curiosity and novelty. The decline reflects normalization, not failure.

Most apps don’t return to zero. They settle into a new baseline that reflects ongoing interest.

10.2 The Viral or External Exposure Pattern

This pattern appears when attention comes from outside the App Store.

- An external mention or trend drives sudden interest

- Downloads surge quickly

- Rankings jump higher than expected

- Attention fades

- Rankings drop, often quickly

In some cases, the app stabilizes at a higher position than before. In others, it returns close to its original rank.

What matters is whether the exposure leads to continued use and reviews, not just downloads.

10.3 The Update-Triggered Re-Evaluation Pattern

This timeline often confuses developers.

- An app update goes live

- Rankings change shortly after — sometimes up, sometimes down

- Movement appears disconnected from the update itself

- Rankings normalize over time

The update acts as a checkpoint rather than a reward. If recent activity supports the app, rankings may improve. If not, movement is usually temporary.

10.4 The Slow-Burn Growth Pattern

This pattern is less common, but more stable.

- The app gains users gradually

- Activity increases slowly over time

- Rankings move in small increments

- Drops are smaller and recoveries are easier

Slow-burn growth rarely produces dramatic jumps, but it often leads to more durable positions. This is typically seen in categories with less volatility or steady user demand.

10.5 Why These Timelines Matter More Than Individual Metrics

Looking at rankings as timelines rather than snapshots helps avoid misinterpretation.

A single ranking change doesn’t tell you much. The shape of the movement does.

When viewed this way, many ranking changes stop feeling mysterious. They follow patterns that repeat, even when the underlying signals differ slightly.

Understanding the timeline you’re in is often more useful than chasing any single metric.

11. Why App Store Rankings Can’t Be Reverse-Engineered Perfectly

After looking at patterns, it’s natural to ask why App Store rankings still feel impossible to pin down completely.

The short answer is that they appear to be designed that way.

Not to confuse people, but to remain adaptable.

11.1 Why Apple Keeps Ranking Details Vague

Apple has never published a detailed breakdown of how App Store rankings work, and there’s a practical reason for that.

Ranking systems that are fully transparent are easier to manipulate. Once behaviors can be reliably gamed, the system stops reflecting genuine user interest.

By keeping weighting unclear and evaluation criteria flexible, Apple limits how predictable the system can become.

This doesn’t mean rankings are random. It means they’re resistant to simple formulas.

11.2 Why Exact Ranking Formulas Don’t Really Exist

It’s tempting to imagine a single algorithm with fixed weights: downloads count for X, reviews count for Y, updates count for Z.

Observed behavior suggests something more dynamic.

Signals appear to be reweighted depending on:

- Category competition

- Overall App Store activity

- Recent user behavior patterns

What matters at one moment may matter less at another. The system seems to adjust based on context rather than applying a static formula.

This helps explain why two similar apps can behave differently at different times, even when nothing obvious has changed.

11.3 Why Ranking Behavior Changes Over Time

Patterns don’t disappear, but their impact shifts.

A signal that once triggered strong movement may become less influential as the ecosystem evolves. New categories emerge, user behavior changes, and Apple adapts how visibility is distributed.

This is also why older ranking advice often feels outdated. It wasn’t necessarily wrong at the time — it just stopped matching reality.

11.4 Why Pattern-Based Understanding Still Works

Even though formulas change, behavior tends to repeat.

Users still download, review, update, and lose interest in similar ways. Competition still rises and falls. Momentum still fades.

By focusing on how rankings respond to these recurring behaviors, rather than trying to decode internal mechanics, it becomes possible to understand ranking movement without needing exact rules.

Patterns aren’t permanent truths.

But they’re far more reliable than guesses.

11.5 What This Means for Interpreting Rankings

The most productive way to approach App Store rankings isn’t to look for certainty.

It’s to look for signals that line up: changes that coincide in time, movement relative to competing apps, and shifts in context.

When rankings move, they’re usually responding to something real — even if that “something” isn’t immediately obvious.

Understanding that makes rankings less frustrating, and more predictable than they first appear.

12. Limitations & What This Guide Can’t Claim

No matter how carefully patterns are observed, there are limits to what can be known from the outside.

This section exists to make those limits explicit — not to weaken the guide, but to keep it accurate over time.

12.1 Correlation Is Not Causation

This guide documents patterns that appear around ranking movement. It does not claim that any single signal directly causes rankings to change.

In many cases, multiple things happen close together. Rankings respond to a combination of factors, some of which are invisible from the outside.

Patterns help explain behavior. They don’t prove mechanisms.

12.2 Not Every App Behaves the Same Way

Apps exist in different categories, markets, and usage contexts.

A pattern that appears frequently in one category may appear less clearly in another. Some apps behave as outliers due to unique user behavior, niche demand, or external exposure.

This guide focuses on what appears most often, not what happens every time.

12.3 Rankings Can Change Without an Obvious Trigger

There are times when rankings move and no clear external change can be identified.

This doesn’t mean the movement is random. It likely means the relevant signal isn’t visible — delayed aggregation, competitor behavior, or internal adjustments can all play a role.

From the outside, not every cause can be traced.

12.4 Apple Can Change Weighting Without Notice

The App Store is not static.

Apple can adjust how signals are interpreted, how often apps are re-evaluated, or how competition is balanced across categories. These changes are typically not announced or publicly documented.

This means patterns can weaken or shift over time.

12.5 Why These Limitations Don’t Make the Guide Useless

Even with these constraints, behavior still repeats.

User interest still rises and falls. Momentum still matters. Competition still shapes outcomes. Those fundamentals don’t disappear when internal systems change.

The purpose of this guide isn’t to offer certainty. It’s to offer context.

And in a system as opaque as the App Store, context is often the most reliable tool available.

Closing Thoughts

App Store rankings aren’t a puzzle waiting to be solved. They’re a system reacting to human behavior at scale.

That’s why they feel inconsistent, delayed, and sometimes unfair. Not because nothing makes sense — but because the signals that matter aren’t always the ones that are easiest to measure.

This guide doesn’t claim to decode the App Store. It documents how rankings tend to behave when real apps meet real users over time. Those patterns won’t predict every outcome, but they do explain most of what people actually see.

When rankings move, something usually changed. When they don’t, something usually didn’t.

Understanding that difference turns confusion into context — and context is often the most useful insight available.